Next: Objective of this thesis

Up: Diplomarbeit

Previous: Contents

Introduction and Overview

How shall I begin, according to the rule?

You set it yourself, and then follow it.

(Richard Wagner, “Die Meistersinger”)

When a camera takes a picture of a scene, the light rays reflected by the objects in the scene form an image on the camera's sensor chip. During this projection the depth (3D) information is lost. The human visual system, however, is equipped with two eyes, each of which views the same area from a slightly different angle. The benefit of such a stereo system is best expressed by Rachel Cooper in the following lines:

“Both images have plenty in common but [each] contains information the other doesn’t. The mind combines the two images, finds similarities and adds in the small differences. The small differences between the two images add up to a big difference in the final picture. The combined image is more than the sum of its parts. It is a three-dimensional stereo picture.” (see footnote 1.1)

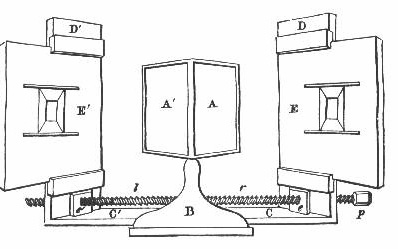

Leonardo da Vinci (1452 – 1519) was the first to describe binocular parallax [GR76]. Charles Wheatstone was the first to use a two-camera system to obtain stereo images. These images could then be viewed through a stereoscope, which he invented in 1838. Figure 1.1 shows an illustration of his construction.


The displacement (disparity) between the two image points at which the eyes see the same scene point is used by the human brain to determine that point's 3D position relative to the viewer. This information is very useful, for example when interacting with objects in the real world. 3D information is especially crucial when one has to deal with moving objects (e.g., in soccer or baseball).
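For two cameras, the link between this displacement and distance can be made quantitative. The following is a minimal sketch assuming an idealized, rectified pinhole stereo pair (parallel optical axes, identical focal length `focal_px` in pixels, baseline `baseline_m` between the camera centers, principal point `(cx, cy)`). Under these assumptions depth is inversely proportional to disparity, Z = f·B/d. The function names are hypothetical and this is not presented as the method developed in this thesis.

```python
from __future__ import annotations


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for an idealized rectified pinhole stereo pair (assumed model)."""
    if disparity_px <= 0:
        # zero disparity means a point at infinity; negative is not physical here
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


def triangulate(x_left: float, y: float, x_right: float,
                focal_px: float, baseline_m: float,
                cx: float, cy: float) -> tuple[float, float, float]:
    """3D point in the left camera frame from a correspondence on the same image row."""
    d = x_left - x_right                      # horizontal displacement (disparity) in pixels
    z = depth_from_disparity(d, focal_px, baseline_m)
    x3 = (x_left - cx) * z / focal_px         # back-project the pixel using the depth
    y3 = (y - cy) * z / focal_px
    return (x3, y3, z)
```

With f = 700 px and B = 0.12 m, a disparity of 42 px gives Z = 700 · 0.12 / 42 = 2 m; doubling the disparity halves the depth, which is why depth resolution degrades for distant points.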

Many kinds of sensors have been used so far to gather information about the environment. In principle, two groups of sensors can be distinguished: active and passive ones. Remote sensing systems that measure naturally available energy are called passive sensors. The sun, for example, provides a convenient source of energy for remote sensing. Its energy is either reflected, or absorbed and then re-emitted, as is the case for thermal infrared wavelengths. Reflected energy is available only if a light source (or another source of radiation) is present, so it cannot be used at night when no such source exists. Naturally emitted energy (such as thermal infrared) can be detected at any time, as long as the amount of incoming energy is sufficient.

Active sensors, on the other hand, such as infrared, ultrasonic, structured-light or laser range scanners, provide their own source of energy. The sensor emits waves of a specific wavelength in a given direction, and the reflected waves are then analyzed. The simplest form of active sensor is an infrared bump sensor, which only detects whether light is reflected and is therefore unable to determine the distance of the object from the sensor. An infrared distance sensor emits infrared light and either measures the position of the reflected light on a CCD chip and uses triangulation to obtain a 3D point, or measures the phase difference between the outgoing and the reflected light. Ultrasonic sensors emit ultrasonic signals and measure the time until the reflected signal returns; since the speed of the signal is known, this time can be converted into a distance. Unfortunately, both methods are unable to determine the exact position of the object that reflects the signal. Structured-light systems obtain information about the geometry from the deformation of a projected pattern, or by triangulation. They require an emitter that projects the light and a camera or another kind of receiver, depending on the wavelength.
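The two ranging principles mentioned above reduce to simple relations. The sketch below assumes a speed of sound of about 343 m/s (dry air near 20 °C) and, for the phase method, light amplitude-modulated at a frequency `modulation_hz`; the function names are hypothetical. In both cases the emitted signal travels to the object and back, so the measured quantity corresponds to twice the distance. These relations yield only a range along the beam, not the direction to the reflecting object.

```python
import math

SPEED_OF_SOUND_M_S = 343.0          # assumption: dry air at roughly 20 °C
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact by definition of the metre


def ultrasonic_distance(echo_time_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Range from a round-trip time of flight: the pulse covers 2 * d."""
    return speed_m_s * echo_time_s / 2.0


def phase_shift_distance(phase_rad: float, modulation_hz: float,
                         speed_m_s: float = SPEED_OF_LIGHT_M_S) -> float:
    """Range from the phase difference between emitted and reflected modulated light.

    The round trip 2 * d delays the signal by 2 * d / speed, i.e. a phase of
    2 * pi * f * (2 * d / speed); solving for d gives speed * phase / (4 * pi * f).
    Because the phase is only known modulo 2 * pi, the result is unambiguous
    only up to speed / (2 * f).
    """
    phase = phase_rad % (2.0 * math.pi)
    return speed_m_s * phase / (4.0 * math.pi * modulation_hz)
```

For example, an echo arriving after 10 ms corresponds to 343 · 0.01 / 2 ≈ 1.7 m, and at a 10 MHz modulation frequency the phase method is unambiguous only up to about 15 m.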

The eyes of a human being are passive sensors, as are cameras. Cameras have lenses that project the light onto a sensor chip; the result is a 2D grayscale or color image. If two cameras are placed at different positions with overlapping fields of view, the 3D position of any point in one image can be computed, provided that the corresponding point in the other image is found and the geometry between the cameras is known (i.e., the cameras are calibrated). The search for corresponding points is one of the main problems in stereo vision and is called correspondence analysis.
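To illustrate what correspondence analysis involves, here is one of the simplest approaches: window-based block matching with a sum-of-absolute-differences (SAD) cost and a winner-takes-all decision. It is shown only as an illustration and is not claimed to be the method of this thesis. The sketch assumes rectified grayscale images of equal size stored as nested lists, so that a corresponding point lies on the same row, shifted left by the disparity d in the right image. The names `sad`, `match_point`, `h` (window half-size) and `max_disparity` are hypothetical.

```python
from typing import List, Optional

Image = List[List[int]]  # grayscale image stored as rows of intensities


def sad(left: Image, right: Image, row: int, xl: int, xr: int, h: int) -> int:
    """Sum of absolute differences between the (2h+1)x(2h+1) windows
    centred at (row, xl) in the left image and (row, xr) in the right image."""
    total = 0
    for dy in range(-h, h + 1):
        for dx in range(-h, h + 1):
            total += abs(left[row + dy][xl + dx] - right[row + dy][xr + dx])
    return total


def match_point(left: Image, right: Image, row: int, x: int,
                h: int = 1, max_disparity: int = 16) -> Optional[int]:
    """Return the disparity d for which the window around right[row][x - d]
    best matches the window around left[row][x] (lowest SAD wins).

    Assumes rectified images of equal size, so the search runs along one row.
    Returns None if no candidate window fits inside the right image.
    """
    height, width = len(left), len(left[0])
    if not (h <= row < height - h and h <= x < width - h):
        raise ValueError("window around (row, x) must lie inside the left image")
    best_d: Optional[int] = None
    best_cost: Optional[int] = None
    for d in range(max_disparity + 1):
        xr = x - d                      # candidate column in the right image
        if xr - h < 0:
            break                       # candidate window would leave the image
        cost = sad(left, right, row, x, xr, h)
        if best_cost is None or cost < best_cost:
            best_d, best_cost = d, cost
    return best_d
```

Even this small example shows why correspondence analysis is hard: in textureless regions many candidate windows have nearly the same cost, and points visible in only one image (occlusions) have no true match at all.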
